Istio is one of many service mesh implementations. There is a very nice overview of the different service mesh implementations by INNOQ. Like all service meshes, Istio tries to move common network functionality into the infrastructure layer, such as mutual transport layer security (mTLS), traffic shifting, resilience features and access control. Istio also offers some more “complex” features like multicluster and multi-mesh, which means operators can create multiple meshes that talk to each other, or span a single mesh across multiple Kubernetes clusters. In this blog post we will take a deeper look at how we can use Istio Multicluster for transparent failovers.

What is Multicluster?

The Multicluster concept in Istio lets you span a mesh across multiple Kubernetes clusters. This can be helpful for various reasons, such as:

- Hybrid- or Multi-Cloud

- Failure tolerance

- Security (e.g. each team has its own cluster)

- Costs

- Performance

There are different flavours of Multicluster, and some of these flavours can be mixed. This blog post by IBM describes the different flavours and how they can be used. The official Istio docs also contain an installation guide for all of these flavours. For the rest of this blog post we will assume a replicated control plane, which means all clusters (and their Istio installations) are completely decoupled and the only requirement is a common root CA for the clusters.

Why Transparent Multicluster?

In the default Multicluster setup you install the Istio CoreDNS component, which resolves a custom DNS subdomain (the default is “global”). Once all the required components are set up, a service can resolve “a.foo.global” and is routed to an endpoint in the same cluster or in a remote cluster.
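For comparison with the transparent approach described later, here is a minimal sketch of what a ServiceEntry for the default “.global” setup might look like. The service name and namespace are taken from the example above; the port 8000, the virtual IP 240.0.0.2 and the gateway hostname are placeholders chosen for illustration, not values from our setup.

```yaml
# Sketch only: port, virtual IP and gateway address are assumptions.
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: a-foo-global
  namespace: foo
spec:
  hosts:
  # Name that clients must call explicitly in the default setup.
  - a.foo.global
  location: MESH_INTERNAL
  ports:
  - name: http
    number: 8000          # assumed service port
    protocol: HTTP
  resolution: DNS
  addresses:
  # Virtual IP for this host; it must be unique per ".global" service.
  - 240.0.0.2
  endpoints:
  # Placeholder for the ingress gateway address of the remote cluster.
  - address: gateway.cluster2.example.com
    ports:
      http: 15443         # multicluster port of the remote gateway
```

The “addresses” entry is the part that requires IP management: every “.global” service needs its own unique virtual IP.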

In one use case our developers wanted a transparent failover for their services. They didn’t want to call a service by a name like “a.foo.global”, just by the normal Kubernetes service name like “a.foo.svc.cluster.local”. If the service is available in the local cluster, the call should be routed to the in-cluster endpoints; otherwise the request should be routed to a remote cluster.

There are some benefits and drawbacks to this approach:

Benefits

- No Istio CoreDNS required (one less component)

- No (external) IP management required (in the default setup we have to ensure that the IP addresses assigned to the “.global” services are unique)

- Developers don’t need to adjust anything

- If a service is not available in the cluster a failover will be made to a remote service

Drawbacks

- It is not obvious to the caller when a failover happens

- You need to set the correct region/zone labels on your nodes and ServiceEntries

How it Works with Istio

The prerequisites for Istio Multicluster can be found in the official docs. Assuming that your clusters and Istio are set up as described in the official documentation, you first need to adjust the multicluster gateway to allow multicluster calls for “*.svc.cluster.local”. The next steps are very similar to the default Multicluster setup: we need to create a so-called ServiceEntry for the remote cluster, which allows Istio to add the gateway of the remote cluster as an endpoint for the service.
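As a rough sketch of the gateway adjustment, the multicluster Gateway could look like this. The resource name and selector follow what the replicated control plane installation typically creates, but treat them as assumptions and check them against your own installation.

```yaml
# Sketch only: name and selector may differ in your installation.
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: istio-multicluster-ingressgateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
  - hosts:
    - "*.global"              # default multicluster subdomain
    - "*.svc.cluster.local"   # added for the transparent failover
    port:
      name: tls
      number: 15443
      protocol: TLS
    tls:
      # The gateway forwards the mTLS connection based on SNI
      # instead of terminating it.
      mode: AUTO_PASSTHROUGH
```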

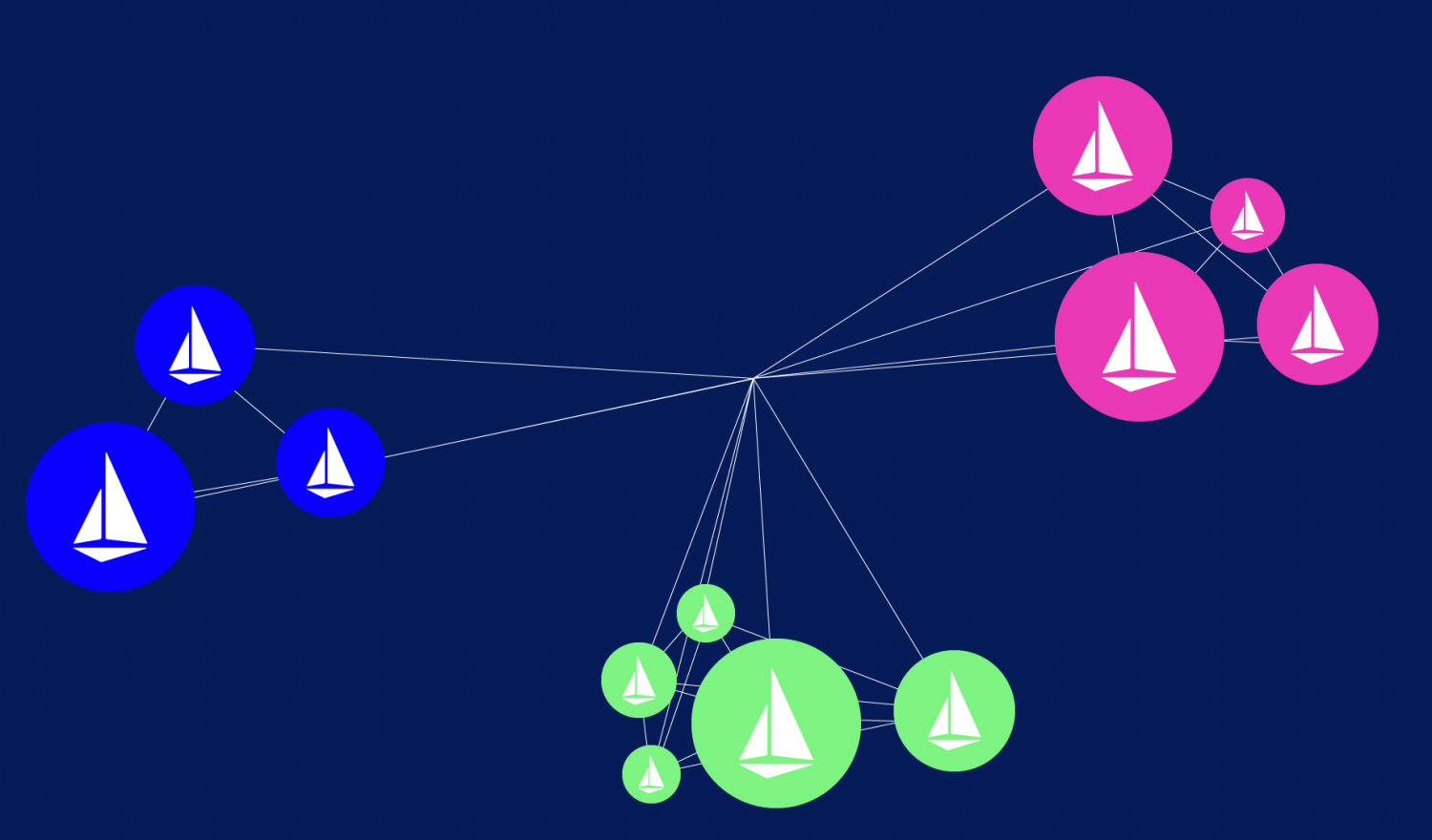

The screenshot below shows how Istio makes sure the ServiceEntry is part of the endpoint list of the service. Since Istio doesn’t have any further information about the gateway referenced in the ServiceEntry, we need to specify its location. In our example this is europe-west1/europe-west1-b; Istio uses this information for locality load balancing. These are the only changes required to make the transparent failover work. As a small side note: if you try the example, you will notice that locality load balancing only works if outlier detection is configured for the called service.
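Put together, the two resources could look roughly like the following sketch. The service “a” in namespace “foo” and the locality are taken from the text; the port, the gateway hostname and the outlier detection values are illustrative assumptions, not tuned recommendations.

```yaml
# Sketch only: port, gateway address and thresholds are assumptions.
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: a-remote
  namespace: foo
spec:
  hosts:
  # Same host as the in-cluster Kubernetes service, so callers
  # do not need to change anything.
  - a.foo.svc.cluster.local
  location: MESH_INTERNAL
  ports:
  - name: http
    number: 8000            # assumed service port
    protocol: HTTP
  resolution: DNS
  endpoints:
  # Placeholder for the ingress gateway of the remote cluster.
  - address: gateway.cluster2.example.com
    # Region/zone of the remote gateway, used for locality load balancing.
    locality: europe-west1/europe-west1-b
    ports:
      http: 15443
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: a
  namespace: foo
spec:
  host: a.foo.svc.cluster.local
  trafficPolicy:
    # Without outlier detection, locality load balancing does not take effect.
    outlierDetection:
      consecutive5xxErrors: 5   # older Istio releases use consecutiveErrors
      interval: 30s
      baseEjectionTime: 30s
```

Note that no “addresses” field is needed here, which is why the IP management of the default setup goes away.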

Here’s my complete walkthrough/demo on GitHub.

Challenges

One challenge is load distribution: Istio only sees one endpoint (the remote gateway), even though there could be n replicas of the service behind this gateway.

As soon as you activate the *.svc.cluster.local wildcard on the multicluster gateway, all services are exposed to the remote clusters. If this is unwanted, you need to define an AuthorizationPolicy for all services.

You need to synchronize the services (and the ServiceEntries) across the different clusters. This challenge exists regardless of whether you use transparent failover or not.
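To limit the exposure caused by the wildcard, a policy along the following lines could be applied per service. This is a minimal sketch: the “app: a” label and the “frontend” service account are hypothetical, and the “action” field requires a reasonably recent Istio release.

```yaml
# Sketch only: workload label and service account are hypothetical.
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: a-allow-frontend
  namespace: foo
spec:
  selector:
    matchLabels:
      app: a
  action: ALLOW
  rules:
  - from:
    - source:
        # Only workloads running under this identity may call the service.
        principals: ["cluster.local/ns/foo/sa/frontend"]
```

Keep in mind that if all clusters use the same trust domain, the principal alone probably does not tell you which cluster a caller runs in.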

Transparent Failover and Multi Version

One of the benefits of Istio is the traffic shifting feature, which means we can route different requests to different versions of our application, e.g. for blue-green or canary deployments. There is already a blog post about multicluster version routing, but with a different focus. The basic idea is still the same as in the transparent failover scenario: we just need to modify the labels of the service and adjust the DestinationRule accordingly. In the DestinationRule we define the possible subsets, for example that version 1 of the service can be found by a given selector.
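The subsets and the traffic split could be sketched as follows. This assumes that the workloads (and the remote endpoints in the ServiceEntry) carry a “version” label with the values v1 and v2; the label name, the subset names and the 90/10 split are illustrative choices.

```yaml
# Sketch only: label name, subset names and weights are assumptions.
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: a
  namespace: foo
spec:
  host: a.foo.svc.cluster.local
  trafficPolicy:
    # Still required for locality load balancing and failover.
    outlierDetection:
      consecutive5xxErrors: 5
      interval: 30s
      baseEjectionTime: 30s
  subsets:
  # Each subset selects endpoints by their labels.
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: a
  namespace: foo
spec:
  hosts:
  - a.foo.svc.cluster.local
  http:
  - route:
    # Canary-style split: most traffic stays on v1.
    - destination:
        host: a.foo.svc.cluster.local
        subset: v1
      weight: 90
    - destination:
        host: a.foo.svc.cluster.local
        subset: v2
      weight: 10
```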

Currently it’s only possible to fail over to another cluster, not to another version of the service, e.g. to fall back to the default/stable version if the canary service is not available. This will probably be available in a future version of Istio, since Envoy supports this feature with the aggregate cluster.

A full example on transparent Multicluster with multi version can be found on GitHub.

Recap

We have seen which steps are necessary to use Istio for transparent failover and how to apply multi-version routing to it. There are still some gaps and challenges when using Istio Multicluster setups, especially with functionality like transparent failover.

In our next blog post we will take a deeper look at Istio mesh expansion and how to integrate existing infrastructure into the Service Mesh.