TL;DR:

To make AI-assisted Confocal Laser Endomicroscopy (CLE) effective in head and neck surgery, the technology must be designed around real surgical workflows and user needs. This post outlines five key user-centered requirements derived from shadowing and interviewing surgeons.

AI is changing the game in healthcare – and surgery is no exception. In complex anatomic areas like the head and neck, AI offers promising opportunities to enhance precision, support intraoperative decision-making, and ultimately improve patient safety. But for AI to deliver real value in the operating room, it must go beyond technical performance and be built around the people who use it.

This blog post kicks off a multi-part series that accompanies our interdisciplinary process of developing AI-assisted Confocal Laser Endomicroscopy (CLE) in head and neck surgery in collaboration with the Department of Otolaryngology, Head and Neck Surgery, at Helios HSK in Wiesbaden. From collecting real-world data and developing robust models to analysing the context of use, defining requirements and designing explainable interfaces surgeons can trust, we’re combining deep learning, clinical insight, and user-centered design.

To lay the foundation, we begin this series by examining the user perspective. This blog post shares key findings from a user study on AI-assisted CLE in head and neck surgery. Combining user shadowing with semi-structured interviews, we explored how surgeons interact with CLE technology and what conditions need to be met for AI to collaboratively support and extend surgical expertise. We condense our findings into five core requirements for developing user-centered AI within the context of CLE-assisted diagnostics in head and neck surgery.

Real-time tissue imaging with CLE

Confocal Laser Endomicroscopy is an advanced imaging technique that allows real-time, high-resolution visualization of tissue at the cellular level. Unlike traditional biopsy methods, which require a subsequent histopathological examination, CLE enables clinicians to observe tissue in vivo (“in the living”) during the procedure. This real-time imaging reveals the morphological characteristics of tissue, helping surgeons differentiate between healthy and malignant areas.

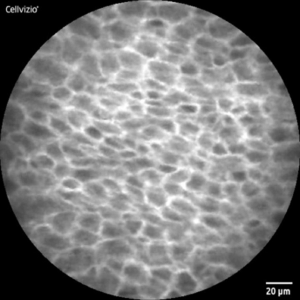

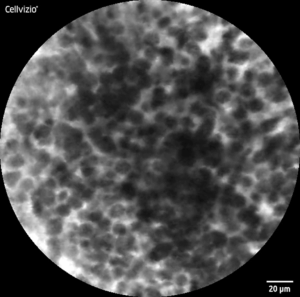

Healthy mucosa typically exhibits a regular, ‚cobblestone-like‘ pattern, each cell is unique, but overall, the image is uniform and consistent. Tumorous tissue, on the other hand, appears chaotic and irregular, with a disordered arrangement of cells in most cases. Beyond that, morphological features vary among different tumor entities.

CLE-Image of healthy (l) and malignant (r) tissue

Handling the probe and interpreting CLE images require considerable expertise, which limits the technology's widespread adoption in clinical practice. This is where artificial intelligence offers promising value. Deep learning models such as convolutional neural networks can be trained to recognize the same kinds of visual patterns that experienced surgeons rely on during diagnosis. By learning from a broad, expert-annotated dataset of CLE images, such models can detect subtle structural deviations that indicate malignancy and distinguish them from healthy tissue. In doing so, they can help surgeons detect tumors and delineate their margins more accurately.

While the potential of AI-assisted CLE is considerable, its effectiveness depends on more than the technology itself: it must complement, not disrupt, the surgeon’s expertise and workflow. This is where a user-centric perspective becomes essential. Understanding how surgeons interact with the technology, the challenges they face, and the context in which decisions are made ensures that AI solutions are not only technologically sound but also practically suitable.

Understanding surgeons’ needs: User shadowing and interviews

To gather comprehensive data on how AI-assisted CLE could integrate into surgical practice and meet user needs, we combined two UX research methods: user shadowing and user interviews. User shadowing allowed us to follow surgeons directly in the operating room, observing their workflow and interaction with the CLE system. This revealed important usability challenges, decision-making patterns, and potential integration points for AI support in the surgical workflow.

Following the shadowing sessions, we conducted semi-structured interviews with experienced surgeons to dive deeper into their experiences and expectations, particularly by asking follow-up questions based on the observations from the shadowing. The combination of these methods allowed for connecting real-world behavior with detailed insights, ensuring a comprehensive understanding of how AI can enhance surgical practices without disrupting them.

Translating insights into actionable requirements

By combining observational insights from shadowing with the contextual depth of expert interviews, we uncovered not just how surgeons work but why they work the way they do. These findings highlighted recurring patterns, unmet needs, and specific usability barriers. To translate this knowledge into actionable outcomes, we synthesized the most critical aspects into five key requirements. They reflect the human-centered foundation for designing AI-assisted CLE tools that are usable, trusted, and embedded in clinical workflows.

Requirement 1: Enabling AI interaction under sterile conditions

To support surgeons without adding complexity, AI-assisted CLE must integrate seamlessly into the existing surgical workflow. Surgeons rely entirely on their hands during procedures, and sterile gloves further limit direct interaction with interfaces. This makes traditional touch-based, mouse, or keyboard controls impractical and highlights the importance of hands-free interaction.

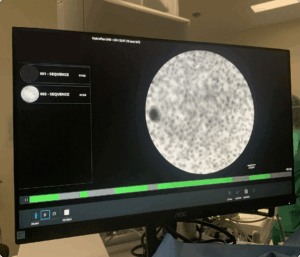

Current CLE interfaces are static, displaying real-time cellular imaging in a fixed circular format with no interactive functionality. However, surgeons already use foot pedals to start video recordings, demonstrating an established hands-free control method. Building on this, alternative interaction approaches such as expanding foot pedal functionality, voice commands, or gesture recognition could provide additional ways to adjust AI settings, toggle overlays, or refine diagnostic views.

By aligning AI-assisted tools with the realities of the surgical environment, such interaction methods could enhance decision-making without adding cognitive or ergonomic strain, allowing surgeons to remain fully focused on the procedure.
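To make the idea of extending existing hands-free controls more concrete, here is a minimal Python sketch of how discrete foot pedal events and voice commands might be mapped to viewer actions. Everything in it (the `ViewState` class, the event names, the view modes, and the bindings) is a hypothetical illustration, not part of any existing CLE system; the only element taken from our observations is that a pedal press already starts video recording.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class ViewState:
    """Hypothetical display state of an AI-assisted CLE viewer."""
    recording: bool = False
    overlay_visible: bool = False
    view_modes: Tuple[str, ...] = ("live", "live+heatmap", "live+prototypes")
    view_index: int = 0

    @property
    def view_mode(self) -> str:
        return self.view_modes[self.view_index]


def toggle_recording(state: ViewState) -> None:
    state.recording = not state.recording


def toggle_overlay(state: ViewState) -> None:
    state.overlay_visible = not state.overlay_visible


def next_view(state: ViewState) -> None:
    # Cycle through the available view modes, wrapping around at the end.
    state.view_index = (state.view_index + 1) % len(state.view_modes)


# (channel, event) -> action. The single pedal press keeps its current
# meaning (recording); the other bindings are illustrative extensions.
BINDINGS: Dict[Tuple[str, str], Callable[[ViewState], None]] = {
    ("pedal", "single_press"): toggle_recording,
    ("pedal", "double_press"): toggle_overlay,
    ("pedal", "long_press"): next_view,
    ("voice", "overlay"): toggle_overlay,
    ("voice", "next view"): next_view,
}


def handle(state: ViewState, channel: str, event: str) -> bool:
    """Apply the bound action; return False (and change nothing) for unknown input."""
    action = BINDINGS.get((channel, event.strip().lower()))
    if action is None:
        return False
    action(state)
    return True


if __name__ == "__main__":
    state = ViewState()
    handle(state, "pedal", "single_press")  # start recording
    handle(state, "voice", "Overlay")       # show the AI overlay
    handle(state, "voice", "zoom in")       # unbound command, ignored
    print(state.recording, state.overlay_visible, state.view_mode)
    # prints: True True live
```

Unrecognized input is deliberately ignored rather than interpreted, on the assumption that an unintended change to the display during surgery is worse than a missed command.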

Requirement 2: Millimeters matter

In head and neck surgery in particular, precision is paramount. Millimeters can make the difference between preserving vital structures and causing irreversible damage, especially to functions such as speaking and swallowing. The region’s dense anatomy, with essential nerves and blood vessels in close proximity, leaves little room for error. While surgeons in other areas may operate with safety margins of several centimeters, in head and neck procedures less than 10 mm often separates malignant tissue from vital structures, sometimes making resections for histopathological analysis impossible.

AI-assisted CLE must therefore prioritize precision and accuracy in tumor margin detection to reduce the risk of both excessive and insufficient resection.

Requirement 3: Diagnostic reasoning is multifactorial

Intraoperative decision-making does not rely on a single input. Besides CLE imaging, surgeons draw on multiple concurrent information streams: macroscopic tissue appearance, manual palpation, patient history, and tumor localization. Each modality contributes a unique perspective to a reliable diagnosis.

Manual palpation lets surgeons assess tissue consistency and texture, giving a binary sense of “tumor or not”, since malignant tissue feels firmer than the surrounding healthy areas. Differences are palpable even between tumor entities; for example, some lymphomas feel different from carcinomas. This tactile feedback shapes early intraoperative impressions and is a valuable source of non-visual information.

CLE, in turn, offers microscopic morphological detail, helping to identify healthy, dysplastic, and malignant regions. Dysplastic areas are of special interest because they represent transitional zones at the tumor margins: regions where formerly healthy cells are beginning to undergo abnormal changes and degenerate, signaling an early shift toward malignancy. Recognizing and assessing dysplasia during surgery is difficult, yet crucial for ensuring complete resection. An AI system designed to support CLE interpretation must therefore account for these nuances and help flag subtle changes.

CLE imaging thus contributes important microstructural information, but it is not the sole basis for clinical decisions. AI-assisted CLE must therefore be designed with this multifactorial diagnostic process in mind.

Requirement 4: Explainability is a key factor

To effectively support surgical decision-making, AI must do more than deliver results: it must communicate its reasoning in ways that align with the clinical thinking of its users.

Surgical diagnosis relies on established visual morphological patterns, so these criteria must be represented clearly. AI explanations should highlight key morphological features without obscuring the original CLE image. By explicitly outlining the features that contributed to the AI’s decision, the system can reinforce the surgeon’s own diagnostic reasoning and strengthen trust in the AI’s conclusions.

One way to augment this visual clarity is color coding, which provides immediate visual guidance in an otherwise grayscale interface. Consistency is critical here: colors must be intuitive and unambiguous to prevent misinterpretation.
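A minimal sketch of how such an overlay could work, written in plain Python on tiny nested lists for readability: each tissue class gets one fixed color that is never reassigned, and that color is alpha-blended onto the grayscale CLE intensity only where a hypothetical per-pixel relevance score from the model exceeds a threshold, with opacity capped so the underlying morphology stays visible. The class names, palette values (taken from the colorblind-friendly Okabe-Ito palette), threshold, and opacity cap are all illustrative assumptions.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]

# Hypothetical fixed palette: each class keeps one color in every case and
# session. Values are taken from the Okabe-Ito colorblind-friendly palette.
PALETTE: Dict[str, RGB] = {
    "healthy": (0, 114, 178),      # blue
    "dysplastic": (240, 228, 66),  # yellow
    "malignant": (213, 94, 0),     # vermillion
}


def blend(gray: int, color: RGB, alpha: float) -> RGB:
    """Alpha-blend a class color over a grayscale intensity in 0..255."""
    r, g, b = (round((1 - alpha) * gray + alpha * c) for c in color)
    return (r, g, b)


def render_overlay(
    gray: List[List[int]],
    labels: List[List[str]],
    relevance: List[List[float]],
    max_alpha: float = 0.4,
    threshold: float = 0.5,
) -> List[List[RGB]]:
    """Tint only pixels the model marks as relevant; all others stay grayscale.

    Opacity scales with relevance but never exceeds max_alpha, so the
    original CLE morphology always remains visible underneath the color.
    """
    out: List[List[RGB]] = []
    for g_row, l_row, r_row in zip(gray, labels, relevance):
        row: List[RGB] = []
        for g, label, rel in zip(g_row, l_row, r_row):
            if rel >= threshold and label in PALETTE:
                row.append(blend(g, PALETTE[label], max_alpha * rel))
            else:
                row.append((g, g, g))
        out.append(row)
    return out


if __name__ == "__main__":
    gray = [[100, 200]]
    labels = [["malignant", "healthy"]]
    relevance = [[1.0, 0.2]]
    print(render_overlay(gray, labels, relevance))
    # prints: [[(145, 98, 60), (200, 200, 200)]]
```

Keeping low-relevance pixels in pure grayscale means color appears only where the model has something to say, which is one way to meet the requirement that explanations highlight features without obscuring the image.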

Notably, not only observing but also listening to surgeons during the user shadowing revealed important insights. One surgeon remarked while interpreting a CLE image: “All the lymphomas I’ve seen so far looked like this.”

This indicates that diagnostic certainty also stems from pattern recognition grounded in prior experience. Surgeons don’t just analyze images; they compare them, partly subconsciously, to mental reference images of familiar cases. To support this reasoning strategy, integrating prototypical example images into the interface could enhance explainability.
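One possible way to surface prototypical examples is nearest-neighbor retrieval: compare a feature vector of the current CLE image with those of expert-confirmed reference cases and show the most similar ones next to the AI's output. The Python sketch below assumes such feature vectors are available (for instance from an intermediate layer of a trained model); `ReferenceCase`, the toy two-dimensional embeddings, and the choice of cosine similarity are hypothetical, for illustration only.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class ReferenceCase:
    case_id: str                  # hypothetical identifier of a stored case
    diagnosis: str                # histopathologically confirmed label
    embedding: Tuple[float, ...]  # feature vector, assumed to come from the model


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # undefined for zero vectors; treat as "not similar"
    return dot / (norm_a * norm_b)


def nearest_prototypes(
    query: Sequence[float], library: List[ReferenceCase], k: int = 3
) -> List[Tuple[ReferenceCase, float]]:
    """Return the k reference cases most similar to the query, best first."""
    scored = [(case, cosine_similarity(query, case.embedding)) for case in library]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    library = [
        ReferenceCase("A", "lymphoma", (0.9, 0.1)),
        ReferenceCase("B", "carcinoma", (0.1, 0.9)),
        ReferenceCase("C", "lymphoma", (0.8, 0.3)),
    ]
    for case, score in nearest_prototypes((1.0, 0.2), library, k=2):
        print(case.case_id, case.diagnosis, round(score, 3))
```

Showing the retrieved cases together with their confirmed diagnoses mirrors the surgeon's own comparison against familiar cases, rather than asking them to trust a bare score.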

When it comes to explainability in AI-assisted CLE, a key principle emerged from our study: augment and align. Rather than imposing abstract new heuristics, AI outputs should be anchored in surgeons’ cognitive strategies and mental models to foster trust, improve usability, and increase acceptance in the surgical setting.

Requirement 5: Support might vary by experience – and must be ethical by design

Handling the probe and interpreting CLE images require considerable expertise. Surgeons currently using this technology are highly experienced and confident in their interpretive skills, whereas less experienced practitioners, such as early-career surgeons, face a steep learning curve. An explanatory interface that visualizes AI-assisted diagnoses can serve as an educational resource, but its effectiveness may depend on how well it caters to users with varying levels of expertise and individual preferences.

What became apparent from the interviews: surgeons do not view AI as a threat but as a collaborative assistant and a tool to enhance surgical precision and improve patient safety.

Still, the role of the human expert remains central. Physicians see themselves as the final authority in any intraoperative decision. They view AI as a supportive tool within a multifactorial diagnostic process and do not intend to rely on it blindly.

To ensure the correct use of AI-assisted CLE, training is essential: surgeons want to be equipped with the knowledge to incorporate AI effectively into their practice. Patient consent is non-negotiable, and ethical considerations have already been addressed through the ethics approval processes required for clinical use.

Looking ahead: Designing AI that works with surgeons

Summing up, the successful integration of AI into surgical workflows is not just a technical challenge; it requires effective and thoughtful human–AI collaboration. Working closely with surgeons reinforced that human-centered development in AI is not just a nice-to-have. The value of AI in surgery will depend not only on what it can do, but on how well it aligns with the real-world needs, constraints, and expertise of its users.

In the next phase of this project, the insights from this requirements analysis will lay the foundation for my master’s thesis. Building on the requirements identified here for integrating AI-assisted CLE into head and neck surgery, my research will investigate how such a collaboration can be realized effectively.

How can interaction patterns be designed to be feasible and effective when hands are not free?

Which explainability methods actually build trust among clinicians, and how should they be implemented?

How can explainable interfaces be designed to fit intraoperative conditions?

These questions will guide the work toward the overarching goal of developing practical, human-centered solutions that enable seamless collaboration between clinicians and AI during surgery, contributing to what matters most: patient safety.