TL;DR:

This study demonstrates how deep learning can automate the interpretation of Confocal Laser Endomicroscopy (CLE) images in head and neck cancer. The AI reliably distinguishes healthy from tumorous tissue in real time, enhances surgical decision-making through transparent visualizations (CAMs), achieves high accuracy even in complex anatomical areas, and improves clinical usability and acceptance.

(This article is an excerpt from the master’s thesis of Stefanie Marx, supervised by the Department of Computer Science, Faculty of Mathematics and Natural Sciences, University of Cologne; inovex GmbH; and the Department of Otolaryngology, Head and Neck Surgery of Helios Dr. Horst Schmidt Kliniken Wiesbaden.)

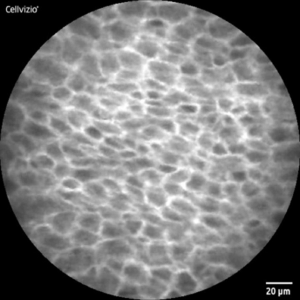

Detecting cancer early and precisely is critical for improving patient outcomes. Diagnosing and treating head and neck tumors is challenging for both surgeons and pathologists. Confocal Laser Endomicroscopy (CLE) is an emerging technology in this field that offers promising advantages. This high-resolution imaging technique allows clinicians to examine tissue at the cellular level in real time, without the need for a traditional biopsy followed by histopathological examination.

Still, a critical problem remains: interpreting CLE images requires substantial expertise and extensive training for medical professionals. This high barrier to entry limits the widespread adoption of this valuable technology in clinical practice.

In this master’s thesis, I investigated how Deep Learning (DL) can be applied to automate the classification of CLE images, supporting the diagnosis of head and neck tumors. The primary objective was to develop an AI-based system capable of reliably distinguishing between healthy and neoplastic (tumorous) tissue.

Dataset & Methodology:

To train and evaluate the performance of deep learning models, I used a dataset of 27,413 CLE images collected from 25 patients with histologically confirmed malignancies. These samples span a variety of anatomical regions in the head and neck area, including:

- Oral cavity

- Oro- and hypopharynx

- Sinonasal tumors

The inclusion of endonasal tumors is particularly noteworthy, as this region has rarely been considered in previous CLE classification research. Their presence significantly increases the complexity and diversity of the dataset, making it a more realistic and clinically relevant benchmark.

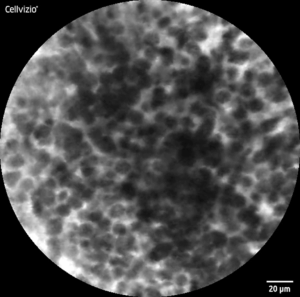

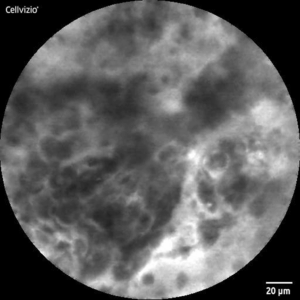

CLE image of healthy (left) and malignant (right) tissue

Preprocessing and Class Balancing

To improve data quality, I carefully processed the images by removing artifacts and low-quality samples and applied data augmentation to balance the classes. After this step, the final dataset was composed of 7,997 images labeled as neoplastic and 8,142 labeled as healthy tissue by a medical professional.
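A minimal sketch of balancing classes through augmentation, in plain Python. The transforms used here (flips and a 180° rotation) and the function names are assumptions for illustration; the thesis excerpt does not state which augmentations were applied. The sketch also equalizes the classes exactly, whereas the final dataset above is only approximately balanced.

```python
import random


def hflip(image):
    """Mirror a 2D image (list of rows) left to right."""
    return [row[::-1] for row in image]


def vflip(image):
    """Mirror a 2D image top to bottom."""
    return image[::-1]


def rot180(image):
    """Rotate a 2D image by 180 degrees."""
    return vflip(hflip(image))


# Assumed set of label-preserving transforms (hypothetical, not from the thesis).
AUGMENTATIONS = [hflip, vflip, rot180]


def balance_by_augmentation(samples, seed=0):
    """Return a new list of (image, label) pairs in which every class
    has as many samples as the largest class.

    Missing samples are created by applying a randomly chosen transform
    to a randomly chosen original image of the under-represented class.
    """
    rng = random.Random(seed)
    by_label = {}
    for image, label in samples:
        by_label.setdefault(label, []).append(image)

    target = max(len(images) for images in by_label.values())
    balanced = list(samples)
    for label, images in by_label.items():
        for _ in range(target - len(images)):
            source = rng.choice(images)
            transform = rng.choice(AUGMENTATIONS)
            balanced.append((transform(source), label))
    return balanced
```

In a patient-wise evaluation, augmented copies would need to stay in the same split as their source image so that near-duplicates do not leak into the test set.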

Deep Learning Architectures

My research compared five Convolutional Neural Network (CNN) architectures, ranging from basic networks trained from scratch to advanced, pre-trained architectures fine-tuned on CLE images.

Basic architectures trained from scratch:

- LeNet-5: A pioneering CNN architecture with a relatively simple structure

- AlexNet: A deeper network that marked a significant advancement in CNN design

Advanced pre-trained architectures fine-tuned for CLE images:

- ResNet-34: Incorporating residual connections to address the vanishing gradient problem

- InceptionV3: Featuring multi-scale processing through parallel convolutional filters

- EfficientNetV2-S: A recent model optimized for both accuracy and computational efficiency

Evaluation Strategy

To ensure robust and clinically relevant evaluation, I implemented two complementary validation strategies:

- 5-fold cross-validation for comprehensive model assessment

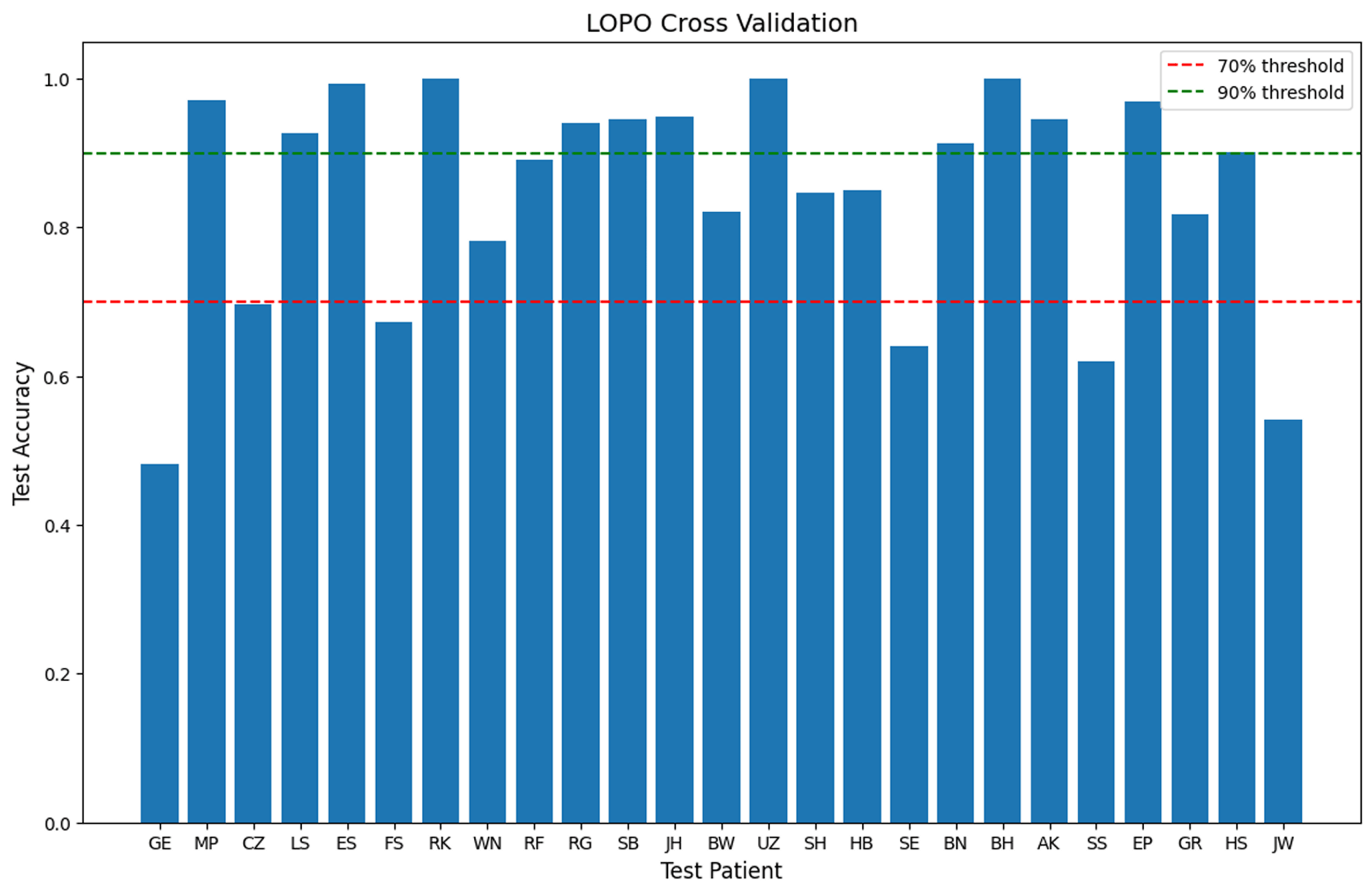

- Leave-One-Patient-Out validation to explore patient-specific challenges

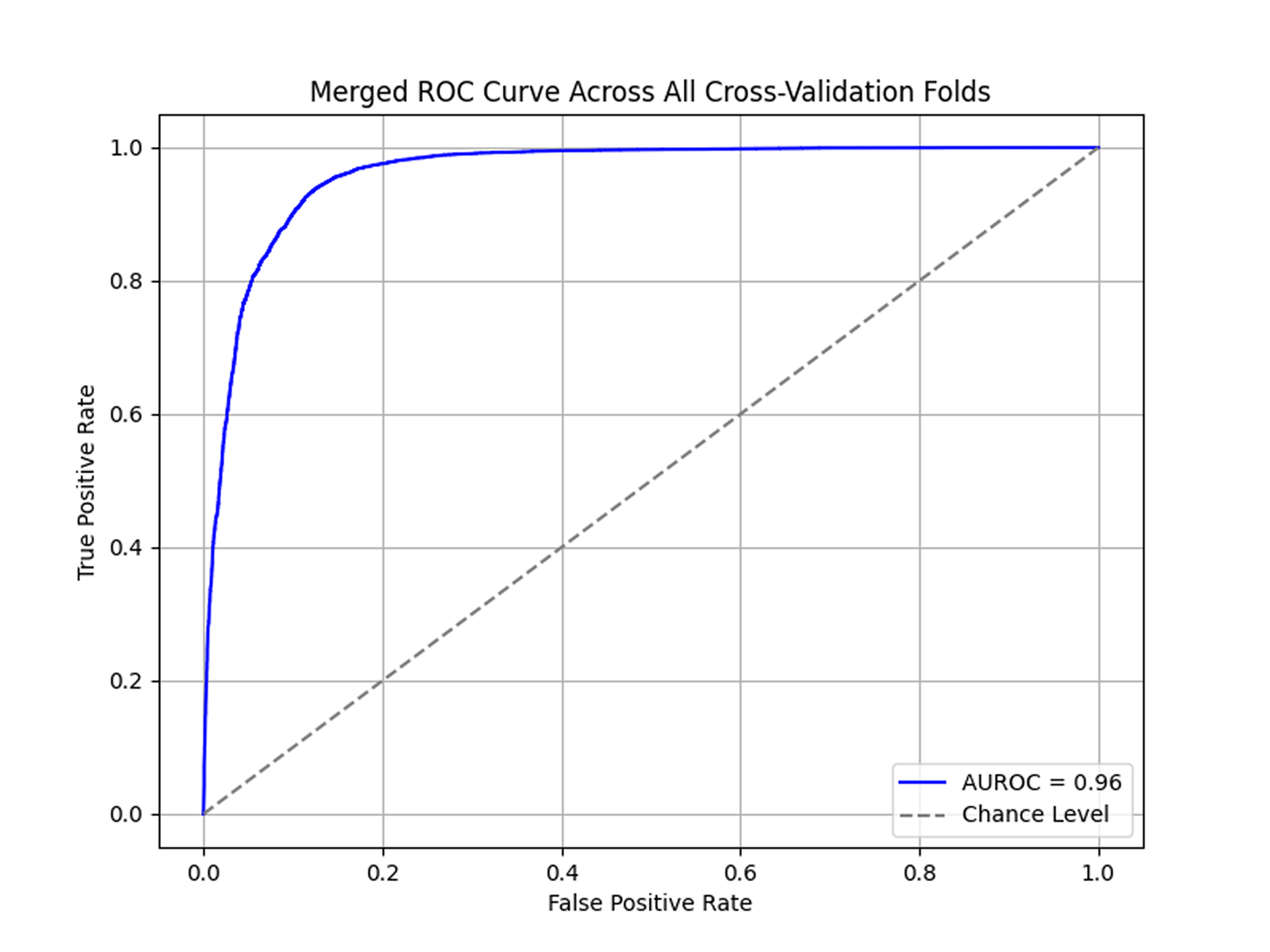
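The two strategies listed above can be sketched as patient-wise index splits. This is a minimal illustration, assuming one patient ID per image; the greedy fold assignment and all function names are hypothetical and not taken from the thesis. Libraries such as scikit-learn offer comparable splitters (`GroupKFold`, `LeaveOneGroupOut`).

```python
from collections import defaultdict


def group_by_patient(patient_ids):
    """Map each patient ID to the indices of that patient's images."""
    groups = defaultdict(list)
    for index, pid in enumerate(patient_ids):
        groups[pid].append(index)
    return groups


def patient_k_fold(patient_ids, k=5):
    """Yield (train_indices, test_indices) for k folds.

    Every patient lands in exactly one test fold, so no patient's images
    appear in both the training and the test set of the same fold.
    """
    groups = group_by_patient(patient_ids)
    folds = [[] for _ in range(k)]
    # Greedy assignment: place the largest patients first, always into the
    # currently smallest fold, to keep fold sizes roughly comparable.
    for pid in sorted(groups, key=lambda p: len(groups[p]), reverse=True):
        smallest = min(folds, key=len)
        smallest.extend(groups[pid])
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield sorted(train), sorted(test)


def leave_one_patient_out(patient_ids):
    """Yield (patient_id, train_indices, test_indices), one split per patient."""
    groups = group_by_patient(patient_ids)
    for pid, test in groups.items():
        train = [i for i, other in enumerate(patient_ids) if other != pid]
        yield pid, train, test
```

With 25 patients, `leave_one_patient_out` produces 25 splits, each testing on the images of a single held-out patient.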

Both evaluation approaches strictly separated patient data between training and test sets, preventing any data leakage that might artificially inflate performance metrics. The models were evaluated using a comprehensive set of performance metrics including accuracy, sensitivity, specificity, F1 score, and Area Under the Receiver Operating Characteristic curve (AUROC).
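For reference, the metrics named above can be computed from binary labels as in the following sketch. It assumes that label 1 means neoplastic and 0 means healthy, and that both classes occur in the evaluated data; the function names are illustrative. The AUROC is computed through its pairwise-ranking interpretation, which is simple but quadratic in the number of samples.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, tn, fp, fn) for binary labels, with 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    """Accuracy, sensitivity, specificity and F1 score from hard predictions."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),   # share of neoplastic images detected
        "specificity": tn / (tn + fp),   # share of healthy images recognized
        "f1": 2 * tp / (2 * tp + fp + fn),
    }


def auroc(y_true, scores):
    """AUROC as the probability that a randomly chosen positive sample
    receives a higher score than a randomly chosen negative one
    (ties count as one half)."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))
```

Sensitivity and specificity are reported separately because the two error types have different clinical consequences: a missed tumor is not equivalent to a false alarm on healthy tissue.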

To address model interpretability, I employed Class Activation Maps (CAMs) to visualize the image regions that the model considered most relevant for its predictions. These heatmaps help validate whether the AI system is actually focusing on medically meaningful structures rather than on artifacts or noise. By surfacing the model’s "thought process", CAMs help transform the system from a black box into a more transparent tool. This transparency is essential for building trust among clinicians and ultimately supporting adoption in real-world diagnostic workflows.
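To illustrate the idea, the sketch below follows the original CAM formulation, in which the map for a class is the weighted sum of the last convolutional feature maps, using that class's weights from the final linear layer. Which CAM variant the thesis used is not stated in this excerpt, so this is an assumption; the function names are illustrative, and upsampling the map to the input resolution is omitted.

```python
def class_activation_map(feature_maps, class_weights):
    """Compute a class activation map.

    feature_maps:  K feature maps, each a 2D list of size H x W
                   (output of the last convolutional layer).
    class_weights: K weights connecting the pooled features to the
                   target class in the final linear layer.
    """
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    return cam


def normalize(cam):
    """Rescale a map to [0, 1] so it can be rendered as a heatmap overlay."""
    values = [v for row in cam for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:  # constant map: nothing to highlight
        return [[0.0 for _ in row] for row in cam]
    return [[(v - lo) / (hi - lo) for v in row] for row in cam]
```

High values in the normalized map mark the regions that contributed most to the predicted class; overlaying the map on the CLE image gives the kind of visualization described above.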

Results and Conclusion:

Among all tested models, EfficientNetV2-S delivered the strongest overall performance, achieving:

- 84.8% accuracy

- 87.0% sensitivity

- 83.6% specificity

These results were obtained through nested 5-fold cross-validation, ensuring a robust evaluation.

Notably, all transfer learning approaches (EfficientNetV2-S, InceptionV3, ResNet-34) clearly outperformed the models trained from scratch (LeNet-5, AlexNet). EfficientNetV2-S also stood out as the most consistent model, with the lowest standard deviation.

To assess generalization across patients, I applied Leave-One-Patient-Out (LOPO) cross-validation using EfficientNetV2-S. The results revealed substantial performance differences between individual patients: accuracy exceeded 90% for 13 patients, while it fell below 70% for 6 patients.
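A per-patient breakdown of this kind can be derived from pooled LOPO predictions as sketched below. The 90% and 70% thresholds come from the text above; the function names and data layout are assumptions for illustration.

```python
def per_patient_accuracy(patient_ids, y_true, y_pred):
    """Return {patient_id: accuracy} over each patient's held-out images."""
    correct = {}
    total = {}
    for pid, truth, prediction in zip(patient_ids, y_true, y_pred):
        total[pid] = total.get(pid, 0) + 1
        correct[pid] = correct.get(pid, 0) + (1 if truth == prediction else 0)
    return {pid: correct[pid] / total[pid] for pid in total}


def summarize_patients(accuracies, high=0.90, low=0.70):
    """Count patients above the high threshold, below the low one, and in between."""
    above = sum(1 for a in accuracies.values() if a > high)
    below = sum(1 for a in accuracies.values() if a < low)
    return {
        "above_high": above,
        "below_low": below,
        "in_between": len(accuracies) - above - below,
    }
```

Reporting the spread per patient, rather than only a pooled average, makes it visible when a model works well for most patients but fails for a few.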

Further interpretability analysis with Class Activation Maps (CAMs) confirmed that the model consistently identified clinically relevant tumorous regions. A confidence analysis of the model outputs revealed that the model mostly makes high-confidence predictions, clustering at both ends of the probability spectrum.
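One simple way to run such a confidence analysis is to bin the predicted probabilities and measure how many predictions lie close to 0 or 1. The sketch below assumes the model outputs a probability for the neoplastic class; the bin count and the 0.9 threshold are illustrative choices, not values from the thesis.

```python
def confidence(probability):
    """Confidence of a binary prediction: probability of the predicted class."""
    return max(probability, 1.0 - probability)


def probability_histogram(probabilities, bins=10):
    """Count predictions per equal-width probability bin over [0, 1]."""
    counts = [0] * bins
    for p in probabilities:
        index = min(int(p * bins), bins - 1)  # p == 1.0 goes into the last bin
        counts[index] += 1
    return counts


def high_confidence_fraction(probabilities, threshold=0.9):
    """Share of predictions whose confidence reaches the threshold."""
    confident = sum(1 for p in probabilities if confidence(p) >= threshold)
    return confident / len(probabilities)
```

A histogram with most of its mass in the first and last bins corresponds to the clustering at both ends of the probability spectrum described above. High confidence alone does not imply correctness, so it is usually worth checking confidence against accuracy as well.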

CLE-Image (l) with CAM overlay (r)

Transforming CLE into a Real-Time Diagnostic Tool

This study demonstrates that established deep learning-based classification methods can be effectively applied to a novel, previously unexamined dataset from diverse anatomical regions within the head and neck.

The successful adaptation of these techniques confirms their viability in a new data context and substantiates the proof of concept.

By assisting with the interpretation of these complex images, AI transforms CLE from a pure image acquisition device requiring expert interpretation into a diagnostic tool capable of providing real-time support. This advancement could significantly enhance the utility of CLE in clinical practice, enabling:

- Assistance for novice endoscopists in interpreting morphological structures

- Acceleration of analysis time

- Visual guidance via CAM, making model decisions more transparent

- Guidance for surgeons during the procedure

Looking ahead, several promising research avenues emerge:

- Two-stage model: first filtering out non-diagnostic images, then classifying the diagnostic images into healthy and neoplastic categories

- Advanced techniques: Tumor margin segmentation and the integration of temporal image sequences

- Human-AI collaboration: Deeper exploration of how clinicians and AI systems work together, including the design of interaction paradigms and the evaluation of explainability features in clinical decision-making

Recent studies suggest that AI assistance can significantly improve diagnostic accuracy and inter-observer agreement among clinicians, highlighting the potential synergistic benefits of integrating AI into clinical practice.

While the findings are promising, larger-scale validation studies with diverse datasets will be critical for clinical adoption of AI-based CLE diagnostics. Continued research should further investigate transparency and usability, ensuring that AI systems in the operating room are trustworthy and usable in real-world conditions to significantly improve head and neck cancer diagnosis.